We develop statistically efficient methods for real-time and adaptive neuroscience experiments. We construct latent models from high-dimensional neural, behavioral, genetic, and other data as a form of simplification (learning underlying patterns) and as a means of combining data across modalities (multi-modal integration). These models are typically learned in a streaming setting, one data point at a time, which allows us to track changes in the data dynamically across time or condition. We also combine these models with closed-loop optimization strategies for designing stimulations in real time, both external (visual) and internal (direct neural perturbations).

We develop new models of low-dimensional neural and behavioral data through manifold construction and adaptation during diverse tasks or stimulus conditions. While many latent space models are fit after a recording is over, we instead focus on the real-time domain to learn ongoing latent dynamics as data are acquired, and to learn response maps for how these dynamics are perturbed under arbitrary stimuli. We also develop new constrained optimization methods to determine the best high-dimensional stimulation patterns to drive neural dynamics in latent spaces.

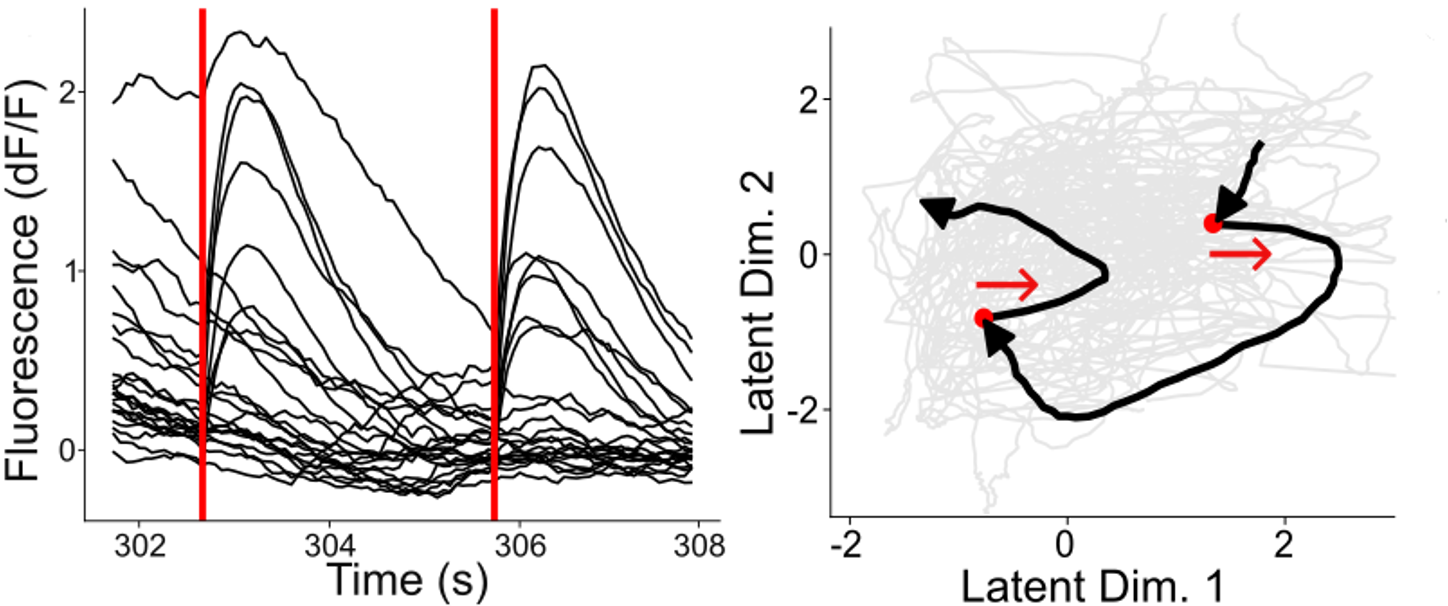

Figure: (Left) We simulate neural responses to stimulation events marked by red lines. (Right) These stimulation patterns drive latent neural dynamics along the first latent dimension.
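To make the idea of learning a latent dimension one data point at a time concrete, here is a minimal sketch using Oja's rule, a classic streaming estimator of the leading principal direction. It is a stand-in chosen for brevity, not the manifold method described above; the simulated data, the latent axis `true_dir`, and the learning rate are all illustrative assumptions.

```python
import math
import random

def oja_update(w, x, lr):
    """One streaming update of the unit vector w toward the top principal direction."""
    y = sum(wi * xi for wi, xi in zip(w, x))                     # latent projection of this sample
    w = [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]    # Oja's rule
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [wi / norm for wi in w]                               # renormalize for stability

def simulate(n_samples=5000, seed=0):
    rng = random.Random(seed)
    true_dir = [0.6, 0.8, 0.0]      # hypothetical latent axis (unit length)
    w = [1.0, 0.0, 0.0]             # arbitrary initial guess
    for _ in range(n_samples):
        z = rng.gauss(0.0, 3.0)     # one-dimensional latent state
        x = [z * d + rng.gauss(0.0, 0.3) for d in true_dir]  # noisy 3D observation
        w = oja_update(w, x, lr=0.01)                        # update as each sample arrives
    return w, true_dir

w, true_dir = simulate()
# |cosine| between the learned and true directions; close to 1 when aligned
alignment = abs(sum(a * b for a, b in zip(w, true_dir)))
```

Because each update touches only the current sample, the estimate can keep following the data if the underlying direction changes slowly, which is the property that matters for real-time use.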

We work on multimodal integration and probabilistic models to uncover latent factors across neural, genetic, and behavioral datasets.

In collaboration with the Kaczorowski Lab, we are developing new variational autoencoder architectures to identify novel factors underlying cognitive resilience in Alzheimer’s disease. We then integrate optimized trajectory modeling techniques to propose novel interventions (genetic, environmental) based on these structured latent spaces. See our recent publication here.

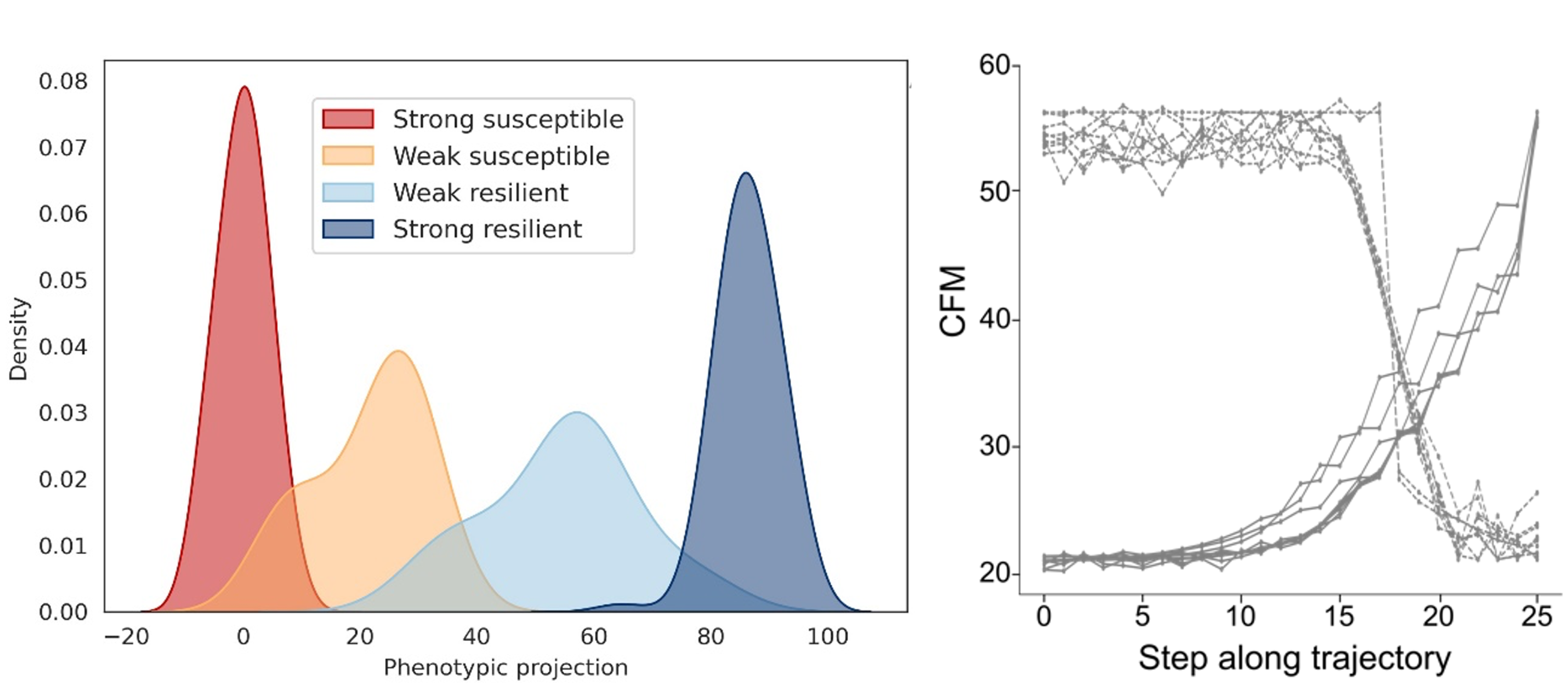

Figure: (Left) Distributions of data projected along a phenotypic axis in our learned latent space show the spectrum of cognitive resilience. (Right) Cognitive metrics (CFM) can be continuously generated along a path from susceptible to resilient (solid) or vice versa (dashed).

To better model temporal patterns in neural activity as it relates to ongoing motor behavior, we develop streaming algorithms through a probabilistic sequence decoding framework. Our goal is to obtain latent dynamical models that can flexibly adapt to ongoing distributional shifts. Through this framework, we aim to improve the stability or adaptability of BCI decoders across time and task, thus reducing or eliminating the need for frequent re-calibration.

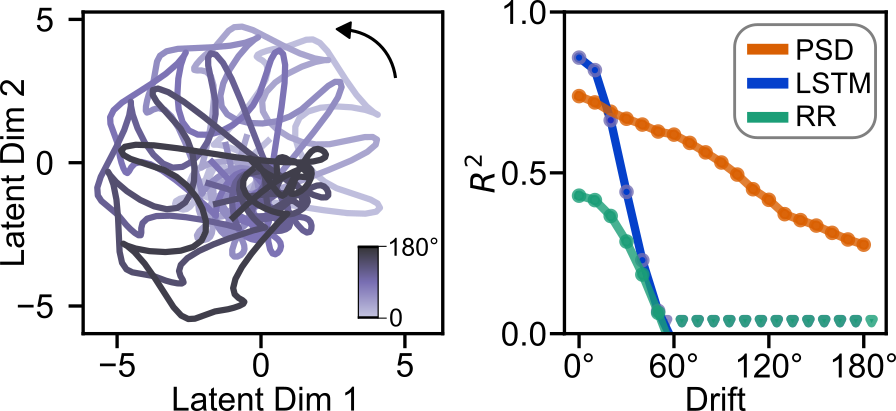

Figure: Simulated rotational drift in MC Maze experimental neural data. The performance of neural decoders degrades as drift increases. Our algorithm, PSD, maintains performance by adapting to the changed neural dynamics.
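The effect of rotational drift on a fixed decoder can be reproduced in a toy setting. The sketch below is not PSD; it swaps in a normalized least-mean-squares (NLMS) update on a 2D linear decoder, and it assumes the true behavior is available for every sample, which is generally not the case in a real BCI. All names and parameters are hypothetical.

```python
import math
import random

def rotate(v, theta):
    """Rotate a 2D vector by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]

def decode(W, x):
    """Linear readout: predicted 2D behavior from 2D neural features."""
    return [W[0][0] * x[0] + W[0][1] * x[1],
            W[1][0] * x[0] + W[1][1] * x[1]]

def nlms_update(W, x, v, lr=0.5, eps=0.1):
    """Normalized LMS step: nudge W to reduce the error on this sample."""
    pred = decode(W, x)
    err = [v[0] - pred[0], v[1] - pred[1]]
    power = x[0] ** 2 + x[1] ** 2 + eps   # eps keeps small inputs from causing large steps
    return [[W[i][j] + lr * err[i] * x[j] / power for j in range(2)]
            for i in range(2)]

def sq_err(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def run(n_steps=2000, max_drift=math.pi / 2, seed=1):
    rng = random.Random(seed)
    W_fixed = [[1.0, 0.0], [0.0, 1.0]]    # calibrated once, at zero drift
    W_adapt = [[1.0, 0.0], [0.0, 1.0]]    # same start, updated on every sample
    total_fixed = total_adapt = 0.0
    for t in range(n_steps):
        theta = max_drift * t / n_steps               # slow rotational drift
        v = [rng.gauss(0, 1), rng.gauss(0, 1)]        # simulated true behavior
        x = [xi + rng.gauss(0, 0.05) for xi in rotate(v, theta)]  # drifting features
        total_fixed += sq_err(decode(W_fixed, x), v)
        total_adapt += sq_err(decode(W_adapt, x), v)  # predict first, then update
        W_adapt = nlms_update(W_adapt, x, v)
    return total_fixed / n_steps, total_adapt / n_steps

mse_fixed, mse_adapt = run()
```

In this toy, the fixed decoder's error grows with the rotation angle while the adaptive decoder stays near the noise floor. The interesting research problem is achieving similar tracking without per-sample supervision.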

We develop models for real-time analysis of signals for brain-computer interfaces that incorporate neural stimulations.

In collaboration with the Chestek Lab, we use markerless, deep-learning-based tracking to automatically extract positions and calculate joint positions of the fingers of non-human primates. We use this system to replace physical devices like manipulanda during brain-computer interface tasks to allow for freer movements and more naturalistic behavior patterns. We also use this system to provide immediate feedback of joint angles and finger and wrist positions during functional electrical stimulation (FES) to automatically optimize high-dimensional stimulation patterns in real time.

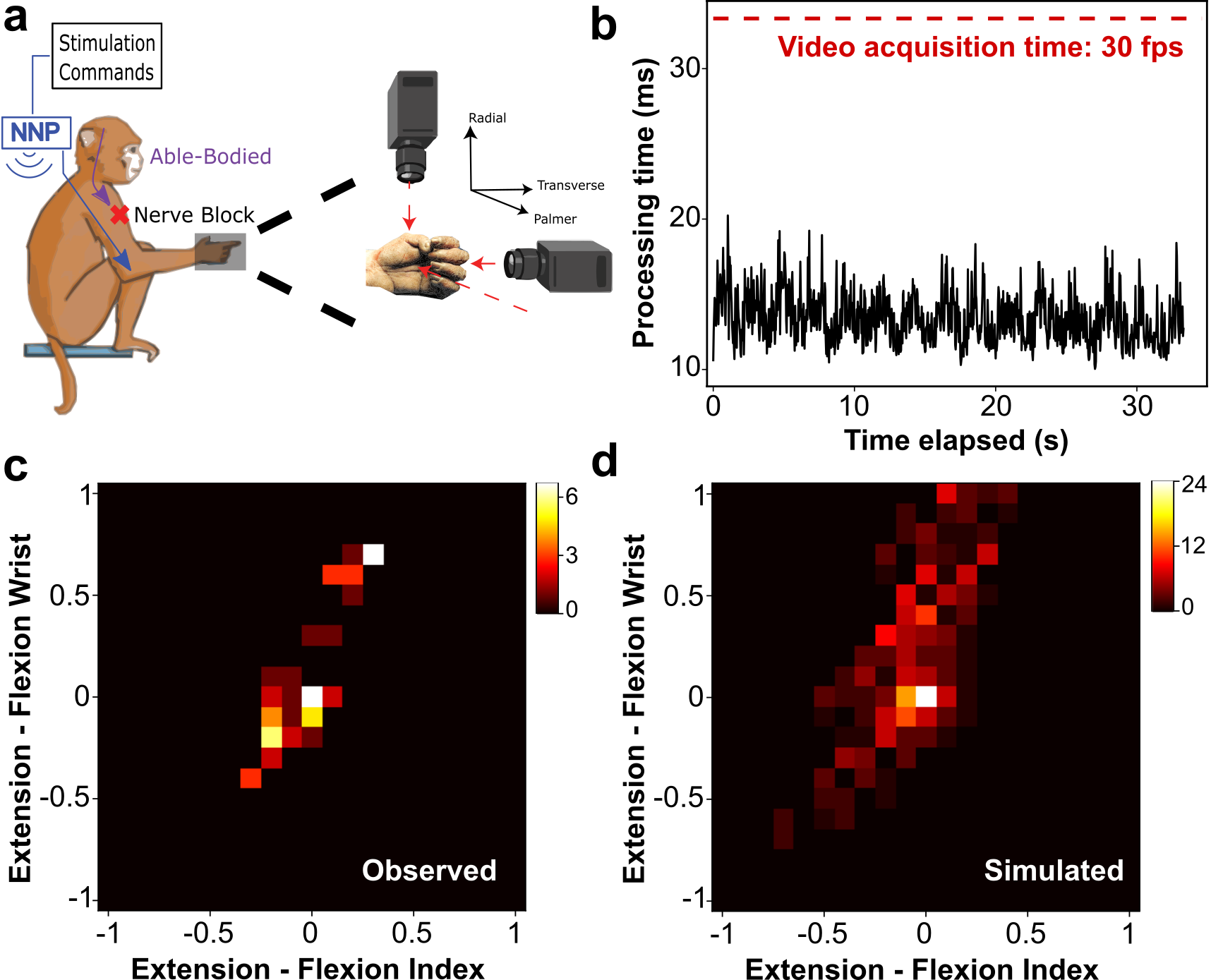

Figure: (Top) Experimental setup with multiple camera views. Processing speeds for inferring finger and wrist angles are faster than image acquisition. (Bottom) The map of reachable poses observed from user-selected stimulation patterns agrees with our simulated model.

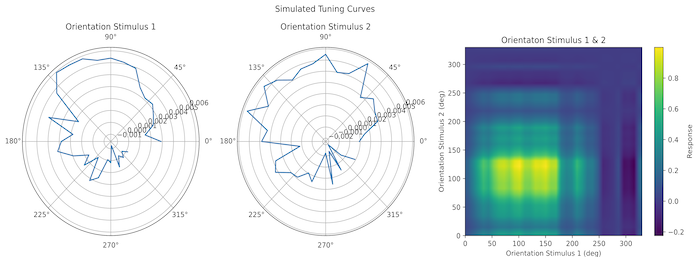

We apply machine learning and Bayesian optimization techniques to estimate neural responses to high-dimensional visual stimuli in real time.

This approach adaptively selects the next stimulus to test, potentially speeding up experiments exponentially relative to testing every stimulus. In collaboration with the Savier, Burgess, and Naumann labs, we use our streaming software platform, improv (paper, GitHub), to run adaptive experiments where we can gain insights into the current brain state in real time and use this information to dynamically adjust an experiment while data collection is ongoing. By bridging the gap between simplistic and complex stimulus spaces, these methods could provide new insights into how sensory stimuli are represented in the brains of behaving animals.

Figure: (Top) Example 2D slices from a 4-dimensional tuning curve of a V1 neuron. (Bottom) Processing speeds for image processing, tuning curve analysis, and optimization for our real-time system.
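The adaptive stimulus-selection loop can be sketched in a few dozen lines. The example below is a simplified illustration, not the pipeline used with improv: it assumes a one-dimensional stimulus, a noiseless simulated neuron (`tuning_curve` is hypothetical), a Gaussian process with a fixed RBF kernel, and an upper-confidence-bound rule for choosing the next stimulus.

```python
import math

def rbf(a, b, length=0.15):
    """Squared-exponential kernel between two stimulus values."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-3):
    """Posterior mean and variance of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k = [rbf(a, x_new) for a in xs]
    mean = sum(ki * ai for ki, ai in zip(k, solve(K, ys)))
    var = rbf(x_new, x_new) - sum(ki * vi for ki, vi in zip(k, solve(K, k)))
    return mean, max(var, 0.0)

def tuning_curve(x):
    """Hypothetical neuron: Gaussian tuning peaked at stimulus value 0.7."""
    return math.exp(-0.5 * ((x - 0.7) / 0.1) ** 2)

def run_bo(n_trials=15, beta=2.0):
    candidates = [i / 50 for i in range(51)]           # 51 possible stimuli
    xs = [0.0, 1.0]                                    # two initial probes
    ys = [tuning_curve(x) for x in xs]
    for _ in range(n_trials):
        def ucb(x):
            m, v = gp_posterior(xs, ys, x)
            return m + beta * math.sqrt(v)             # favor high mean or high uncertainty
        x_next = max((c for c in candidates if c not in xs), key=ucb)
        xs.append(x_next)
        ys.append(tuning_curve(x_next))                # "record" the response
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], len(xs)

best_stimulus, n_evaluations = run_bo()
```

The loop presents only a subset of the candidate stimuli, concentrating trials where the model is either uncertain or expects a strong response. In higher-dimensional stimulus spaces the gap between this and exhaustive sampling widens quickly, though the kernel and acquisition rule would need more care than shown here.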

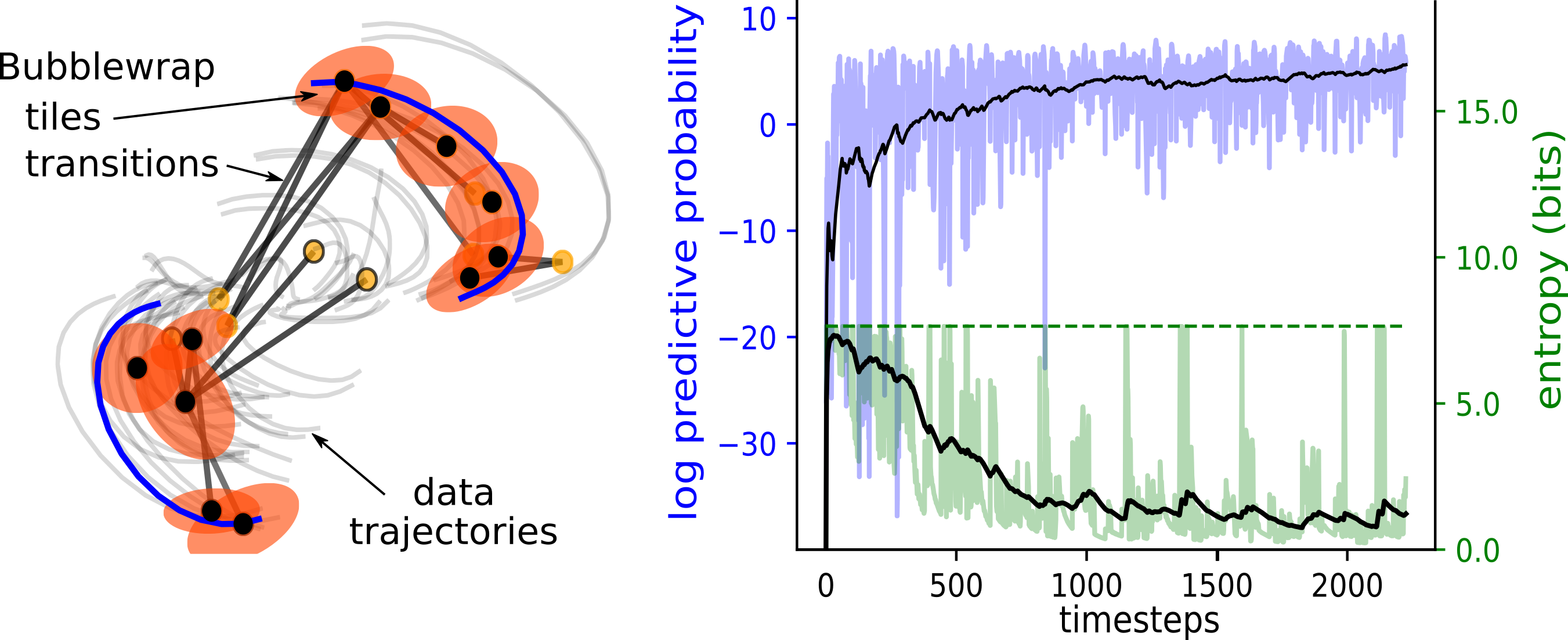

We developed a new method for approximating dynamics as a probability flow between discrete tiles on a low-dimensional manifold. The model can be trained quickly, retains predictive performance many time steps into the future, and is fast enough to serve as a component of closed-loop causal experiments in neuroscience. Our recent preprint on this work can be found here.
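A heavily simplified version of this idea is a Markov chain over a fixed set of tiles, updated one observation at a time. The sketch below makes several assumptions that differ from the preprint: the tiles are fixed angular bins on a ring rather than learned, the trajectory is simulated, and the class name `TileFlow` and its parameters are hypothetical.

```python
import math
import random

N_TILES = 8
TILE_WIDTH = 2 * math.pi / N_TILES

def tile_of(angle):
    """Map an angle on the ring to the index of the tile containing it."""
    return int((angle % (2 * math.pi)) / TILE_WIDTH) % N_TILES

class TileFlow:
    """Streaming estimate of transition probabilities between tiles."""

    def __init__(self, n_tiles, prior=1e-3):
        # A small prior count keeps every row normalizable before data arrive
        self.counts = [[prior] * n_tiles for _ in range(n_tiles)]
        self.prev = None

    def observe(self, tile):
        """Update counts with the transition from the previous tile to this one."""
        if self.prev is not None:
            self.counts[self.prev][tile] += 1.0
        self.prev = tile

    def transition_row(self, i):
        total = sum(self.counts[i])
        return [c / total for c in self.counts[i]]

    def predict(self, tile, n_steps):
        """Push a point mass on `tile` forward n_steps through the learned flow."""
        n = len(self.counts)
        rows = [self.transition_row(i) for i in range(n)]
        p = [0.0] * n
        p[tile] = 1.0
        for _ in range(n_steps):
            p = [sum(p[i] * rows[i][j] for i in range(n)) for j in range(n)]
        return p

def simulate(n_steps=2000, jitter=0.2, seed=0):
    rng = random.Random(seed)
    model = TileFlow(N_TILES)
    for t in range(n_steps):
        # Noisy rotation that advances one tile width per time step
        angle = (t + 0.5) * TILE_WIDTH + rng.gauss(0.0, jitter)
        model.observe(tile_of(angle))
    return model

model = simulate()
forecast = model.predict(tile=0, n_steps=3)
most_likely = max(range(N_TILES), key=lambda j: forecast[j])
```

Each observation costs a single counter increment, and a multi-step forecast is a handful of small matrix-vector products, which is why models of this kind are cheap enough to sit inside a closed loop.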

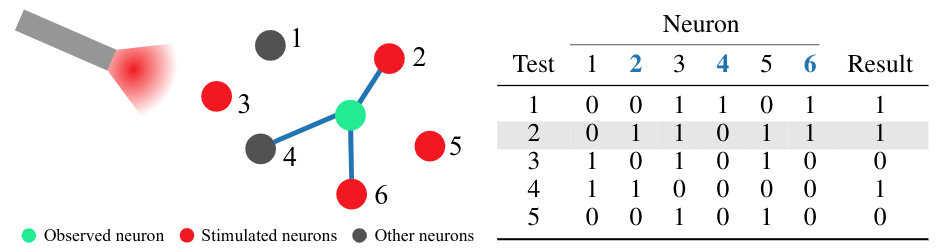

How can we infer connectivity among large populations of neurons in vivo? Using stimulations of small ensembles and a statistical method called group testing, we show in our recent paper that this is now feasible even in networks of up to 10,000 neurons.
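The core logic of group testing can be illustrated with a textbook decoder known as COMP (combinatorial orthogonal matching pursuit). This is not the inference procedure from the paper; it assumes noiseless, binary responses from a single target neuron, and the network size, group size, and number of tests are arbitrary choices for the example.

```python
import random

def run_tests(true_inputs, n_neurons, n_tests, group_size, rng):
    """Stimulate random ensembles; the target responds if any true input is stimulated."""
    tests = []
    for _ in range(n_tests):
        group = set(rng.sample(range(n_neurons), group_size))
        responded = not group.isdisjoint(true_inputs)
        tests.append((group, responded))
    return tests

def comp_decode(tests, n_neurons):
    """COMP: every neuron in a silent test is ruled out; the rest remain candidates."""
    candidates = set(range(n_neurons))
    for group, responded in tests:
        if not responded:
            candidates -= group
    return candidates

rng = random.Random(0)
n_neurons = 1000
true_inputs = set(rng.sample(range(n_neurons), 5))    # hypothetical presynaptic partners
tests = run_tests(true_inputs, n_neurons, n_tests=150, group_size=200, rng=rng)
decoded = comp_decode(tests, n_neurons)
```

With noiseless responses, COMP never discards a true input, so `decoded` always contains `true_inputs`; any extra candidates are false positives that additional tests would remove. Here 150 ensemble stimulations stand in for 1,000 single-neuron stimulations, and the saving grows as connectivity becomes sparser relative to network size.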